From One AI to Many: Why the Future Belongs to Purpose-Built Agents

The future isn’t a single “company chatbot”. The future is many agents, each with a clear job, creating clarity and trust.

For the past two years, most companies have experimented with AI the same way: one chatbot, connected to “everything”, answering “anything”.

At first, it feels magical.

Then reality hits.

- Answers become vague.

- Permissions get blurry.

- Trust erodes.

- And teams quietly stop using it.

The problem isn’t AI.

The problem is the idea that one agent can serve everyone.

Work Is Specialized. AI Should Be Too.

Modern organizations don’t operate as one brain.

They’re made of:

- Engineering teams shipping code

- HR teams managing people and policies

- Support teams helping customers

- Sales and Ops teams running the business

Each team:

- Uses different tools

- Owns different knowledge

- Has different risks

- Needs different answers

So why would they all share the same AI?

The future isn’t a single “company chatbot”.

The future is many agents, each with a clear job.

What an Agent Really Is (And Isn’t)

An agent is not just a prompt.

And it’s definitely not “ChatGPT with more context”.

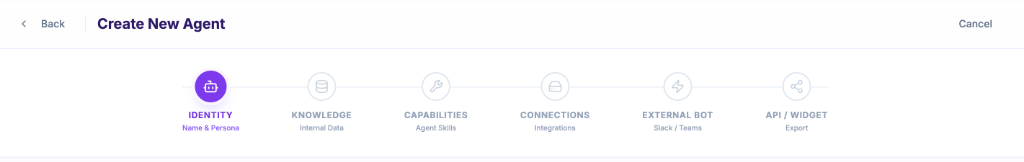

A real agent has:

- ✓ A clear identity (who it is, what it’s responsible for)

- ✓ Explicit knowledge boundaries

- ✓ Specific integrations

- ✓ Limited, intentional capabilities

- ✓ Defined places where it operates

An agent is software with responsibility.
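To make those five properties concrete, an agent can be written down as a small declarative spec that the platform checks before every lookup or action. The Python sketch below is illustrative only. The `AgentSpec` class, its field names, and the source, capability, and channel identifiers are all hypothetical, not any specific product's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical declaration of a purpose-built agent (illustrative only)."""

    name: str                          # clear identity: who it is
    purpose: str                       # what it is responsible for
    knowledge_sources: frozenset[str]  # explicit knowledge boundaries
    integrations: frozenset[str]       # specific tools it may connect to
    capabilities: frozenset[str]       # limited, intentional actions
    channels: frozenset[str]           # defined places where it operates

    def can_read(self, source: str) -> bool:
        # Deny by default: any source not listed is out of bounds.
        return source in self.knowledge_sources

    def can_do(self, capability: str) -> bool:
        return capability in self.capabilities

    def operates_in(self, channel: str) -> bool:
        return channel in self.channels


hr_agent = AgentSpec(
    name="hr-agent",
    purpose="Single source of truth for people operations",
    knowledge_sources=frozenset({"notion:hr-workspace", "gdrive:hr-folders"}),
    integrations=frozenset({"notion", "google-drive"}),
    capabilities=frozenset({"answer_policy_question"}),
    channels=frozenset({"microsoft-teams", "hr-portal"}),
)

print(hr_agent.can_read("gdrive:hr-folders"))  # True
print(hr_agent.can_read("gitlab:monorepo"))    # False
print(hr_agent.operates_in("slack"))           # False
```

The design choice doing the work is deny-by-default: anything not listed in the spec is out of bounds, which is what lets users know what an agent can and cannot see.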

The Shift: From Generic AI to Agent Systems

This is the shift we’re seeing across the best teams: from one generic assistant connected to everything, to a system of purpose-built agents.

This is not about adding complexity.

It’s about aligning AI with how work actually happens.

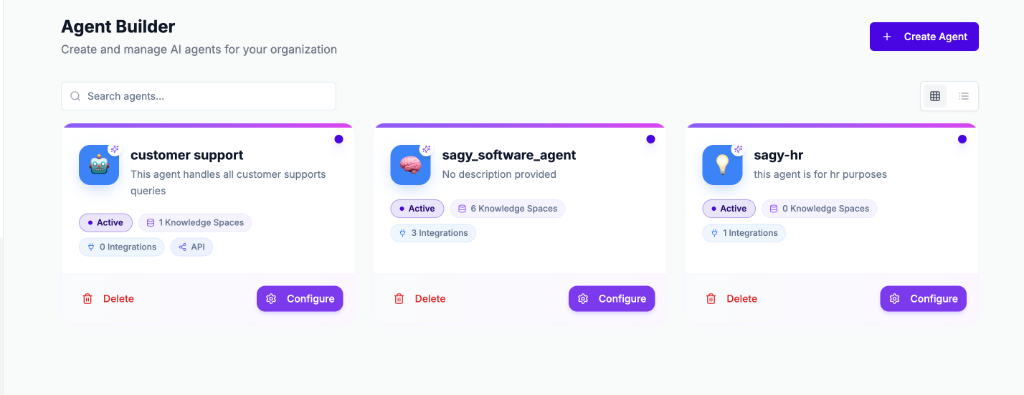

Three Agents. Three Worlds. Zero Confusion.

Let’s make this concrete.

1. The Software Engineering Agent

Purpose: Help engineers move faster without losing context.

Access

- Jira

- Confluence

- GitLab

- Engineering knowledge spaces

Where it lives

- Slack

- Internal API for dev tools

What it does

Explains tickets and architecture decisions. Answers questions like “Why was this built this way?” Helps onboard new developers. Surfaces past discussions and tradeoffs.

This agent speaks engineering. Nothing else.

2. The HR Agent

Purpose: Be the single source of truth for people operations.

Access

- Notion (HR workspace only)

- Google Drive (HR folders)

Where it lives

- Microsoft Teams

- Internal HR portal

What it does

Answers questions about HR policies and procedures. Supports onboarding and internal questions. Reduces repetitive HR interruptions. Keeps sensitive data isolated.

This agent doesn’t know about code. And that’s a feature, not a limitation.

3. The Customer Support Agent

Purpose: Help customers instantly — with approved information only.

Access

- A knowledge space containing public documentation

Where it lives

- Website chat widget

- Public API

What it does

Answers customer questions. Reduces ticket volume. Ensures consistency with official docs.

This agent never improvises. It answers only from what’s meant to be public.
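The support agent's “never improvises” rule can be enforced in code rather than left to the prompt: look for a match in the approved public corpus, and decline when nothing relevant comes back. The sketch below assumes a toy keyword-overlap matcher standing in for real search; the `PUBLIC_DOCS` corpus, the `answer` function, and the `min_overlap` threshold are hypothetical. A production agent would use proper retrieval and have a model phrase the reply from the retrieved text.

```python
import re
from typing import Optional

# Hypothetical approved corpus: the only knowledge the support agent may draw on.
PUBLIC_DOCS = {
    "docs/billing": "You can change your billing plan from the account settings page.",
    "docs/export": "Projects can be exported as CSV from the project menu.",
}

FALLBACK = "I couldn't find that in our public documentation, so I won't guess."

# Tiny stopword list so filler words don't count as matches.
STOPWORDS = {"a", "an", "as", "be", "can", "do", "for", "from", "how",
             "i", "is", "my", "the", "to", "what", "you", "your"}


def keywords(text: str) -> set:
    # Lowercase alphabetic tokens minus stopwords: a crude stand-in for real retrieval.
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def answer(question: str, min_overlap: int = 2) -> tuple[str, Optional[str]]:
    """Return (reply, source_id); source_id is None when the agent declines."""
    q = keywords(question)
    best_id, best_score = None, 0
    for doc_id, text in PUBLIC_DOCS.items():
        score = len(q & keywords(text))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_id is None or best_score < min_overlap:
        # Nothing approved matches well enough: decline instead of improvising.
        return FALLBACK, None
    # Reply only with approved text and report which document it came from.
    return PUBLIC_DOCS[best_id], best_id


print(answer("How do I export my project as CSV?"))
print(answer("What is the salary band for senior engineers?"))
```

Returning the source id with every reply is what lets the chat widget show where an answer came from, and every declined question (`None`) is exactly the kind of gap worth logging.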

Why This Model Works

Because clarity creates trust.

When users know:

- What an agent knows

- What it doesn’t know

- Where answers come from

They stop second-guessing. They stop double-checking. They start relying on it.

That’s when AI stops being a demo — and becomes infrastructure.

Agents Need Feedback Loops, Not Just Conversations

A serious agent doesn’t just answer questions. It learns from gaps.

That’s why each agent needs its own dashboard:

- Unanswered questions

- Repeated topics

- Knowledge gaps

- Usage patterns

This turns agents into:

- Documentation signals

- Process improvement tools

- Living reflections of how teams actually work
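The four dashboard signals can be derived from a plain per-agent interaction log. The sketch below assumes each logged question records the agent, a topic label, the channel, and whether the agent found an approved answer. The `Interaction` record, the `dashboard` function, and all sample data are hypothetical, and how topics get labeled is left open.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Interaction:
    agent: str
    question: str
    topic: str       # assumed to be labeled upstream (by hand or by a classifier)
    channel: str
    answered: bool   # False when the agent had no approved source to answer from


def dashboard(log: list[Interaction], agent: str, top_n: int = 3) -> dict:
    """Summarize one agent's interactions into the four dashboard signals."""
    mine = [i for i in log if i.agent == agent]
    topics = Counter(i.topic for i in mine)
    gaps = Counter(i.topic for i in mine if not i.answered)
    return {
        # Questions the agent could not answer from its knowledge.
        "unanswered_questions": [i.question for i in mine if not i.answered],
        # Topics asked about more than once, most frequent first.
        "repeated_topics": [t for t, n in topics.most_common(top_n) if n > 1],
        # Topics with the most unanswered questions: candidates for new documentation.
        "knowledge_gaps": [t for t, _ in gaps.most_common(top_n)],
        # Where the agent is actually being used.
        "usage_by_channel": dict(Counter(i.channel for i in mine)),
    }


log = [
    Interaction("hr-agent", "How many vacation days do I get?", "leave", "microsoft-teams", True),
    Interaction("hr-agent", "Can I carry vacation days over?", "leave", "hr-portal", False),
    Interaction("hr-agent", "Who approves parental leave?", "leave", "microsoft-teams", False),
    Interaction("hr-agent", "Where is the expense policy?", "expenses", "microsoft-teams", True),
    Interaction("support-agent", "How do I export a project?", "export", "web-widget", True),
]
report = dashboard(log, "hr-agent")
print(report["knowledge_gaps"])  # ['leave']
```

Keeping the log per agent is what makes the gaps actionable: a cluster of unanswered `leave` questions points at one owner (HR) and one set of documents to update.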

The Big Idea: AI That Respects Structure

The next generation of AI at work won’t be louder.

It won’t be more autonomous for the sake of it.

It won’t try to replace teams.

It will mirror the structure your organization already has:

Not one AI.

But many.

Each doing one job well.

That’s how AI becomes useful, durable, and adopted — not just impressive.